Color Management across Apple Frameworks. Part 3: Color Space in Image-Specific APIs

This article is the third part of this series. It focuses on Color Space in the image-specific APIs of Apple’s frameworks. It’s recommended to read the first two parts of this series first.

Color Space in Image-Specific APIs

We have covered the Color-Specific APIs in the last section. In the following content, we will talk about the Color Space in Image-Specific APIs.

Like the color-specific APIs mentioned above, some image-specific classes expose Color Space properties, either directly or indirectly. Images are usually the objects we deal with in image processing, such as setting up an image processing pipeline using Core Image, drawing custom content using Core Graphics, or diving directly into Metal to process and display images.

You may still wonder how the Color Spaces affect how we process images when using those frameworks and APIs. Image processing involves mathematical operations on each pixel. On this level, you are dealing with data/numbers.

But what the numbers mean affects your algorithm. For example, doubling the values of a gamma-encoded pixel doesn’t double its brightness; that only holds when the values are in a linear working space.

Moreover, there are some APIs that handle Color Management automatically for you, and the auto Color Management only works if the input and output sources have the correct color space tagging.

There is no Color Space information in the top-level APIs like UIImage and NSImage, since they are just wrappers and entry-level APIs for image processing or displaying. Color Space information can be found in the following frameworks and APIs.

Core Video

In the Core Video framework, CVPixelBuffer is the most used API for image processing. You have probably seen this class when setting up a camera session to get image preview buffers and process them before displaying them to users. CVPixelBuffer is an alias of CVImageBuffer, which stores the pixel buffer of an image. Unlike plain bitmap Data, which comprises only raw values like RGBA pixels, a CVPixelBuffer also stores other information about the image buffer, such as its dimensions and, in its attachments, its color space attributes.

You can create or get a CVPixelBuffer in the following ways:

Use the CVPixelBufferCreate method to create an empty single pixel buffer for a given size and pixel format.

Create a Core Graphics CGContext with an unsafe pointer to a CVPixelBuffer and then draw content onto it.

Use Core Image’s render method to render a CIImage to a CVPixelBuffer.

When you receive the callback from a camera-specific API like AVCaptureDataOutputSynchronizerDelegate, you can get the CMSampleBuffer and then get the CVPixelBuffer using the CMSampleBufferGetImageBuffer(_:) method.

CVPixelBuffer has no ColorSpace property. Instead, the attributes of a Color Space are stored in the attachments of a CVPixelBuffer. You can think of attachments as the metadata of a CVPixelBuffer.

To get attachments from a CVPixelBuffer, you use the CVBufferCopyAttachments(_:_:) method.
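As a minimal sketch of that call: the helper name colorAttachments(of:) is hypothetical, and the example assumes a deployment target where CVBufferCopyAttachments is available (iOS 15 / macOS 12 or later).

```swift
import CoreVideo

/// Returns the propagated attachments of a pixel buffer as a Swift dictionary.
/// (Hypothetical helper, for illustration only.)
func colorAttachments(of pixelBuffer: CVPixelBuffer) -> [String: Any] {
    // .shouldPropagate selects the attachments that travel with the buffer,
    // which is where the color-related keys are normally stored.
    guard let attachments = CVBufferCopyAttachments(pixelBuffer, .shouldPropagate) else {
        return [:]
    }
    // CFDictionary is toll-free bridged, so a conditional cast is enough.
    return attachments as? [String: Any] ?? [:]
}
```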

The attachments object retrieved above is an instance of CFDictionary, and you can cast it to Dictionary<String, Any> using toll-free bridging. If you examine the attachments of a CVPixelBuffer from a camera session, you will notice a few attachments regarding the Color Space: kCVImageBufferColorPrimariesKey and kCVImageBufferTransferFunctionKey.

kCVImageBufferColorPrimariesKey is the key to the value of Color Primaries of a Color Space. The possible values are:

kCVImageBufferColorPrimaries_ITU_R_709_2

kCVImageBufferColorPrimaries_P3_D65

kCVImageBufferColorPrimaries_DCI_P3

…

The kCVImageBufferTransferFunctionKey is the key to the value of Transfer Function of a Color Space. The transfer function describes the tonality of an image for use in color matching operations, along with a color primaries gamut. The possible values are:

kCVImageBufferTransferFunction_ITU_R_709_2

kCVImageBufferTransferFunction_sRGB

kCVImageBufferTransferFunction_ITU_R_2100_HLG

…

To represent the Display P3 Color Space, you can construct the attachments containing the following key-value pairs:
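A minimal sketch of such a dictionary, assuming that P3 primaries with a D65 white point plus the sRGB transfer function are what describe Display P3 here. The names displayP3Attachments and tagAsDisplayP3 are hypothetical and only serve this example.

```swift
import CoreVideo

// Display P3: P3 primaries with a D65 white point and the sRGB transfer function.
let displayP3Attachments: [String: Any] = [
    kCVImageBufferColorPrimariesKey as String: kCVImageBufferColorPrimaries_P3_D65,
    kCVImageBufferTransferFunctionKey as String: kCVImageBufferTransferFunction_sRGB,
]

/// Tags a pixel buffer as Display P3 (hypothetical helper).
func tagAsDisplayP3(_ pixelBuffer: CVPixelBuffer) {
    // .shouldPropagate keeps the tags attached when the buffer is passed along.
    CVBufferSetAttachments(pixelBuffer, displayP3Attachments as CFDictionary, .shouldPropagate)
}
```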

Then you can use the CVBufferSetAttachments(_:_:_:) method to set the attachments on the CVPixelBuffer.

The values of kCVImageBufferColorPrimariesKey and kCVImageBufferTransferFunctionKey affect how a CGColorSpace is created for higher-level APIs like CIImage. If you prefer, you can create a CGColorSpace yourself using the CVImageBufferCreateColorSpaceFromAttachments(_:) method.
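A short sketch of that conversion, assuming the attachments are read with CVBufferCopyAttachments (iOS 15 / macOS 12 or later). The helper name colorSpace(of:) is hypothetical.

```swift
import CoreVideo
import CoreGraphics

/// Builds a CGColorSpace from the color attachments of a pixel buffer, if possible.
/// (Hypothetical helper, for illustration only.)
func colorSpace(of pixelBuffer: CVPixelBuffer) -> CGColorSpace? {
    guard let attachments = CVBufferCopyAttachments(pixelBuffer, .shouldPropagate) else {
        return nil
    }
    // The function follows the Create rule, so take the retained value
    // and let Swift release it when it is no longer referenced.
    return CVImageBufferCreateColorSpaceFromAttachments(attachments)?.takeRetainedValue()
}
```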

The returned value of CVImageBufferCreateColorSpaceFromAttachments(_:) is an Unmanaged<CGColorSpace>?, which requires you to claim ownership by taking its retained value and then releasing it when it’s no longer needed (if takeRetainedValue is used to get the Swift-managed object, the releasing is done by Swift though, see this for more information).

⚠️ The attachments don’t necessarily determine the actual Color Space of a CVPixelBuffer. For example, if a CVPixelBuffer has the pixel format kCVPixelFormatType_DisparityFloat32, its Color Space will always be treated as Extended Linear Gray when a CIImage is constructed from it, even if you manually attach the color attributes of sRGB to it, since depth or disparity data should only contain monochrome values.

Another important note: not all CVPixelFormat types are supported. For example, kCVPixelFormatType_32RGBA is not supported when creating a CVPixelBuffer. To list all the supported CVPixelFormat types, you use the CVPixelFormatDescriptionArrayCreateWithAllPixelFormatTypes(_:) method.
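As a sketch, the returned CFArray can be bridged to an array of numbers. The helper name allPixelFormatTypes() is hypothetical.

```swift
import Foundation
import CoreVideo

/// Returns every pixel format type that Core Video has a description for.
/// (Hypothetical helper, for illustration only.)
func allPixelFormatTypes() -> [OSType] {
    guard let formats = CVPixelFormatDescriptionArrayCreateWithAllPixelFormatTypes(kCFAllocatorDefault),
          let numbers = formats as? [NSNumber] else {
        return []
    }
    // Each entry is a number holding the four-char code of one pixel format.
    return numbers.map { $0.uint32Value }
}
```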

The OSType is a four-char code type, which is a UInt32. To convert it to a readable string, you can use the following method:
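One possible conversion is sketched below. The function name fourCCString is hypothetical, and the fallback to a decimal string for non-printable codes is a choice made for this example.

```swift
/// Converts a four-char code such as kCVPixelFormatType_32BGRA into "BGRA".
/// (Hypothetical helper; OSType is a type alias of UInt32.)
func fourCCString(_ code: UInt32) -> String {
    // Split the 32-bit value into its four bytes, most significant first.
    let bytes = [24, 16, 8, 0].map { UInt8((code >> UInt32($0)) & 0xFF) }
    // Some legacy formats are small integers (32 for 32ARGB, for example)
    // rather than printable characters, so fall back to the number itself.
    guard bytes.allSatisfy({ (0x20...0x7E).contains($0) }) else {
        return String(code)
    }
    return String(decoding: bytes, as: UTF8.self)
}
```

For example, passing 0x42475241 (the value of kCVPixelFormatType_32BGRA) yields "BGRA".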

The Core Video framework doesn’t provide high-level methods to process or draw images. You can directly access the bitmap data using the CVPixelBufferGetBaseAddress(_:) method. For example, you can iterate over the data as BGRA pixel values and do some mathematical calculations on them.

For more capable image processing, the Core Graphics, Core Image, and Metal frameworks are recommended. When it comes to real-time, high-frame-rate processing, Core Image or Metal is usually the preferred choice, since both process images on the GPU.
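A minimal sketch of such direct access, assuming a non-planar kCVPixelFormatType_32BGRA buffer. The helper name halveBGR(in:) is hypothetical, and it operates on the stored numbers as they are, without any color management.

```swift
import CoreVideo

/// Halves the B, G and R components of a 32BGRA pixel buffer in place.
/// (Hypothetical helper, for illustration only.)
func halveBGR(in pixelBuffer: CVPixelBuffer) {
    precondition(CVPixelBufferGetPixelFormatType(pixelBuffer) == kCVPixelFormatType_32BGRA)

    // The base address is only valid while the buffer is locked.
    CVPixelBufferLockBaseAddress(pixelBuffer, [])
    defer { CVPixelBufferUnlockBaseAddress(pixelBuffer, []) }

    guard let base = CVPixelBufferGetBaseAddress(pixelBuffer) else { return }
    let width = CVPixelBufferGetWidth(pixelBuffer)
    let height = CVPixelBufferGetHeight(pixelBuffer)
    let bytesPerRow = CVPixelBufferGetBytesPerRow(pixelBuffer) // may include padding

    for y in 0..<height {
        let row = base.advanced(by: y * bytesPerRow).assumingMemoryBound(to: UInt8.self)
        for x in 0..<width {
            let pixel = row + x * 4 // byte order: B, G, R, A
            pixel[0] /= 2
            pixel[1] /= 2
            pixel[2] /= 2
        }
    }
}
```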

Core Graphics

A CGImage is a bitmap image representing a rectangular array of pixels. You use CGImageSourceCreateWithURL and CGImageSourceCreateImageAtIndex to create a CGImage from a URL. Then you can retrieve the Color Space directly through its colorSpace property.

Those creation APIs read the ICC Profile embedded in the file (for a URL input source) or in the binary Data (for a Data input source), create a CGColorSpace from it, and assign it to the colorSpace of the output CGImage.

If you know in advance that the embedded ICC Profile is wrong (which is uncommon), you can create a new CGImage whose CGColorSpace is replaced by one you specify.
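A small sketch of those two calls; the helper name loadImage(at:) is hypothetical.

```swift
import Foundation
import CoreGraphics
import ImageIO

/// Decodes the first image in a file and returns it with its color space.
/// (Hypothetical helper, for illustration only.)
func loadImage(at url: URL) -> (image: CGImage, colorSpace: CGColorSpace?)? {
    guard let source = CGImageSourceCreateWithURL(url as CFURL, nil),
          let image = CGImageSourceCreateImageAtIndex(source, 0, nil) else {
        return nil
    }
    // The color space comes from the profile that Image I/O found in the file.
    return (image, image.colorSpace)
}
```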
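As a sketch, this can be done with the copy(colorSpace:) method of CGImage. The helper name reassigningColorSpace(of:to:) is hypothetical. Note that the pixel values are not converted; only the way they are interpreted changes.

```swift
import CoreGraphics

/// Reinterprets the pixel values of an image in another named color space.
/// (Hypothetical helper, for illustration only.)
func reassigningColorSpace(of image: CGImage, to name: CFString) -> CGImage? {
    guard let colorSpace = CGColorSpace(name: name) else { return nil }
    // Returns nil when the new color space has a different number of components.
    return image.copy(colorSpace: colorSpace)
}
```

For instance, reassigningColorSpace(of: image, to: CGColorSpace.displayP3) would make an sRGB-tagged image render with more saturated colors, because the same numbers now refer to a wider gamut.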

Normally, it’s hard to get the ICC Profile wrong when exporting images, unless you do it on purpose. However, the copy(colorSpace:) method can help us understand how color management works.

When creating a CGContext to draw your custom content, you will be asked to pass a ColorSpace:
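A minimal sketch of creating a bitmap context, assuming an 8-bit RGBA layout and Display P3 as the color space. The helper name makeDisplayP3Context is hypothetical.

```swift
import CoreGraphics

/// Creates an 8-bit RGBA bitmap context that uses Display P3.
/// (Hypothetical helper, for illustration only.)
func makeDisplayP3Context(width: Int, height: Int) -> CGContext? {
    guard let colorSpace = CGColorSpace(name: CGColorSpace.displayP3) else { return nil }
    return CGContext(
        data: nil,          // let Core Graphics allocate the backing store
        width: width,
        height: height,
        bitsPerComponent: 8,
        bytesPerRow: 0,     // 0 lets Core Graphics choose the row stride
        space: colorSpace,
        bitmapInfo: CGImageAlphaInfo.premultipliedLast.rawValue
    )
}
```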

The CGColorSpace passed when creating a CGContext means:

It’s the working Color Space of the CGContext. If you draw sampled images that have different Color Spaces, color matching using the specified RenderingIntent will be applied to make sure colors appear to be similar in different gamuts.

It’s also the output Color Space when creating an output image using the makeImage() method.

The default Rendering Intent of a CGContext is “default”: Core Graphics uses perceptual rendering intent when drawing sampled images and relative colorimetric Rendering Intent for all other drawing. You can use the setRenderingIntent(_:) method to specify a Rendering Intent. Rendering Intents are the algorithms to use for mapping colors from the source gamut to the destination gamut. For more information about Rendering Intents, please refer to this documentation.

Core Image

Core Image is a high-level image processing framework that helps us process and save images, with support for chaining filters. Typically, you create a CIImage from external sources like a URL or Data, or from underlying framework image objects like CGImage or CVPixelBuffer, and then use a CIContext to trigger the render task.

Color Management is performed during the rendering of a CIContext. To understand the auto Color Management in a CIContext, we need to understand the scattered-around colorSpace properties in some APIs like CIImage, CIContext, and CIRenderDestination.

Input Color Spaces

A CIImage serves as both the input and the output when rendering with Core Image. It’s just a lightweight recipe representing how the actual image data is constructed or processed. Heavy lifting occurs during the process of rendering.

There is a property named colorSpace in CIImage. The Color Space plays a key role in auto Color Management of Core Image.

You don’t assign or change the colorSpace of a CIImage instance. Normally, the CGColorSpace is inferred from the way the image is initialized. For example, as mentioned above, for a CVPixelBuffer with kCVImageBufferColorPrimaries_P3_D65 and kCVImageBufferTransferFunction_sRGB attachments, the CIImage’s colorSpace will be Display P3. For a CIImage constructed from a CGImage, its colorSpace is likewise inferred from the CGImage itself.

To override the colorSpace property when constructing the CIImage, you can supply additional options:
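A sketch of such an override using CIImageOption.colorSpace; the helper name loadAsDisplayP3(url:) is hypothetical.

```swift
import CoreImage

/// Loads an image and makes Core Image interpret its pixels as Display P3,
/// regardless of the profile embedded in the file.
/// (Hypothetical helper, for illustration only.)
func loadAsDisplayP3(url: URL) -> CIImage? {
    guard let displayP3 = CGColorSpace(name: CGColorSpace.displayP3) else { return nil }
    return CIImage(contentsOf: url, options: [.colorSpace: displayP3])
}
```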

The example above is for demonstration only. Assigning a wrong ColorSpace will break the auto Color Management and will result in displaying inaccurate colors.

A CIImage doesn’t necessarily have color pixel values for display purposes. For example, a CIImage can serve as a Depth Map or a Disparity Map, from which you determine the depth value of a pixel, and no Color Management should be applied during the process. Those kinds of images should explicitly skip auto Color Management by assigning an NSNull color space to them.

You can specify the Color Space in the initializers that have the options parameter.

When creating a CIImage from bitmap data, you can directly pass nil to the colorSpace parameter.
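Both variants are sketched below. The function names are hypothetical, and the bitmap variant assumes tightly packed RGBA8 data.

```swift
import CoreImage
import CoreVideo

/// Wraps a depth or disparity buffer without color management.
/// (Hypothetical helper, for illustration only.)
func unmanagedImage(from pixelBuffer: CVPixelBuffer) -> CIImage {
    CIImage(cvPixelBuffer: pixelBuffer, options: [.colorSpace: NSNull()])
}

/// Wraps raw RGBA8 bytes without color management by passing a nil color space.
/// (Hypothetical helper, for illustration only.)
func unmanagedImage(fromRGBA8 data: Data, width: Int, height: Int) -> CIImage {
    CIImage(
        bitmapData: data,
        bytesPerRow: width * 4, // assumes no row padding
        size: CGSize(width: width, height: height),
        format: .RGBA8,
        colorSpace: nil
    )
}
```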

Note: by specifying CIImageOption.colorSpace with NSNull() in the options dictionary, no color management will be performed for this image, even though the colorSpace property may still be non-null. If you disable color management for a CIImage created from a JPEG/PNG URL, the colorSpace property may still be non-null, which I suppose is an issue in the Core Image framework; this issue doesn’t occur for a HEIF image.

When writing a custom CIFilter using the Core Image or Metal Shading Language, the apply(extent:roiCallback:arguments:) method of a CIKernel accepts not only CIImage instances but also CISampler instances as arguments. When initializing a CISampler, you can specify a colorSpace for it. If you do so, color matching will be performed from the working color space to the target color space:
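A minimal sketch, assuming sRGB as the target color space and using the kCISamplerColorSpace option key. The helper name makeSRGBSampler(for:) is hypothetical.

```swift
import CoreImage

/// Creates a sampler whose values are color matched to sRGB before
/// they reach the kernel. (Hypothetical helper, for illustration only.)
func makeSRGBSampler(for image: CIImage) -> CISampler? {
    guard let sRGB = CGColorSpace(name: CGColorSpace.sRGB) else { return nil }
    return CISampler(image: image, options: [kCISamplerColorSpace: sRGB])
}
```

The resulting sampler would then be passed in the arguments array of apply(extent:roiCallback:arguments:) in place of the CIImage.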

After passing through some custom CIKernels or some built-in CIFilters, the colorSpace of a CIImage will be nil, indicating that its implicit Color Space equals the working Color Space and no color matching should be performed, which will be discussed in the next article.

Working Color Space

CIImage is just a recipe to represent the process of how the input image is constructed and processed. No rendering process will be performed when you construct and chain several CIImage instances. A CIContext is what you need when you are ready to render the image.

Think of a CIContext as a choreographer; it manages the intermediate caches, creates a chain to process images (of course, this process contains auto Color Management), and renders to the output destination.

When you construct a CIContext, you can optionally specify the working Color Space of a CIContext. A working color space is used as the intermediate destination color space when performing color matching during the image processing chain.

You can specify the working color space when initializing a CIContext with the options parameter:
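As a sketch, assuming extended linear Display P3 as the desired working space; the helper name makeP3WorkingContext() is hypothetical.

```swift
import CoreImage

/// Creates a context that does its intermediate math in extended linear Display P3.
/// (Hypothetical helper, for illustration only.)
func makeP3WorkingContext() -> CIContext? {
    guard let working = CGColorSpace(name: CGColorSpace.extendedLinearDisplayP3) else {
        return nil
    }
    return CIContext(options: [.workingColorSpace: working])
}
```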

By default, the working Color Space will be extended Linear sRGB (tested in iOS 16-18 and macOS 13-15), which means:

It uses sRGB Color Gamut.

It’s Linear. So it assumes that the color values are already gamma-decoded.

It’s extended, which means that pixel values are stored as floating points and can extend beyond the range of [0,1].

Like the CIImage mentioned above, you can also pass an NSNull instance to indicate that the working Color Space is nil and no Color Management should be performed during the intermediate operations.

Changing the working Color Space affects how input images are converted before any processing is applied to them. More details regarding Color Management will be covered in the next article.

Output Color Space

The output Color Space of a CIContext affects the color space of the outputs. A CIContext can render the final results to the following targets:

A CGImage, using the createCGImage(_:from:) method.

A CVPixelBuffer, using the render(_:to:) method.

A MTLTexture, using the render(_:to:commandBuffer:bounds:colorSpace:) method.

Bitmap Data, using the render(_:toBitmap:rowBytes:bounds:format:colorSpace:) method.

Compressed or uncompressed image data or files, using jpegRepresentation(of:colorSpace:options:), writeJPEGRepresentation(of:to:colorSpace:options:), or any other similar methods.

You may have noticed that not all render methods above have a colorSpace parameter. For those APIs that don’t let you provide a Color Space, the outputColorSpace option specified when initializing a CIContext takes effect:
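A minimal sketch of setting that option, assuming Display P3 as the desired default output; the helper name makeP3OutputContext() is hypothetical.

```swift
import CoreImage

/// Creates a context whose default output color space is Display P3.
/// (Hypothetical helper, for illustration only.)
func makeP3OutputContext() -> CIContext? {
    guard let displayP3 = CGColorSpace(name: CGColorSpace.displayP3) else { return nil }
    return CIContext(options: [.outputColorSpace: displayP3])
}
```

Render methods without a colorSpace parameter, such as createCGImage(_:from:), would then fall back to this color space.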

In other words, CIContextOption.outputColorSpace is ignored when you specify the colorSpace of the render target, for example with createCGImage(_:from:format:colorSpace:). You can think of it as the default value of the output color space. Some methods, like render(_:toBitmap:rowBytes:bounds:format:colorSpace:), allow you to pass nil to the colorSpace parameter, which indicates that no color matching will be performed when rendering to the output. (Note: this behavior depends on the SDK that the app is linked against. For OS X 10.10 or earlier, a nil value indicates that CIContextOption.outputColorSpace will be used, according to CIContext.h.)

In addition to the methods mentioned above, there is CIRenderDestination, which provides an API for specifying a render task destination's properties, such as buffer format, alpha mode, clamping behavior, blending, and color space, properties formerly tied to CIContext. You can think of the methods mentioned above as simplified, convenience versions of the CIRenderDestination API, which offers far more customization.

A CIRenderDestination represents a render buffer or surface, which requires a Color Space to interpret.

Different initializers of CIRenderDestination accept various destination types, like the CVPixelBuffer, Bitmap Data, and MTLTexture we mentioned above. After initializing a CIRenderDestination instance, its Color Space is first inferred from the destination type.

For example, for a CIRenderDestination with CVPixelBuffer, its Color Space can be inferred by its attachments. And for other destination types, the destination's colorSpace property will default to a CGColorSpace created with sRGB, extendedSRGB, or genericGrayGamma2_2, according to the documentation.

You can override the inferred colorSpace of a CIRenderDestination instance by setting the colorSpace property of it. You can also set it to nil to bypass the output color matching.
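A sketch of overriding the destination color space, assuming a CVPixelBuffer destination that should be treated as Display P3. The function name render(_:to:using:) is hypothetical.

```swift
import CoreImage
import CoreVideo

/// Renders an image into a pixel buffer, treating the buffer as Display P3.
/// (Hypothetical helper, for illustration only.)
func render(_ image: CIImage, to pixelBuffer: CVPixelBuffer, using context: CIContext) throws {
    let destination = CIRenderDestination(pixelBuffer: pixelBuffer)
    // Override whatever was inferred from the buffer's attachments.
    // Assigning nil instead would bypass the output color matching.
    destination.colorSpace = CGColorSpace(name: CGColorSpace.displayP3)
    let task = try context.startTask(toRender: image, to: destination)
    _ = try task.waitUntilCompleted()
}
```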

Metal

Finally, we come to the lowest-level framework in this article: Metal. When it comes to image processing, we typically use Metal in the following ways:

Use Metal framework-specific APIs like MTLRenderPipelineDescriptor and MTLDevice to define and create a render pipeline, commit it to the GPU, and get the result.

Work with other frameworks like Core Image or Metal Performance Shaders to simplify the process.

Either way, to process an image, you need to load the image as an MTLTexture. There are a few ways to do so:

Create your MTLTexture manually by using MTLTextureDescriptor.

Use MTKTextureLoader to load an MTLTexture from a URL or asset name.

MTLTexture has no Color Space property. In fact, there is no Color Management during a render pass in Metal, with one exception: a concept called the sRGB Texture.

An sRGB Texture is a texture treated as an sRGB gamma-encoded texture. When sampling a pixel value from this texture in a fragment shader, sRGB → Linear decoding will be performed automatically. Similarly, when writing a pixel value to the texture in a fragment shader, Linear → sRGB encoding will be performed automatically. This ensures that the pixel values you deal with are in linear form. More information can be found in section 7.7.7, Conversion Rules for sRGBA and sBGRA Textures, of the Metal Shading Language Specification.

There are a few ways to specify an MTLTexture as an sRGB Texture. When creating a MTLTextureDescriptor, you can set a pixelFormat value that ends with “_srgb”:

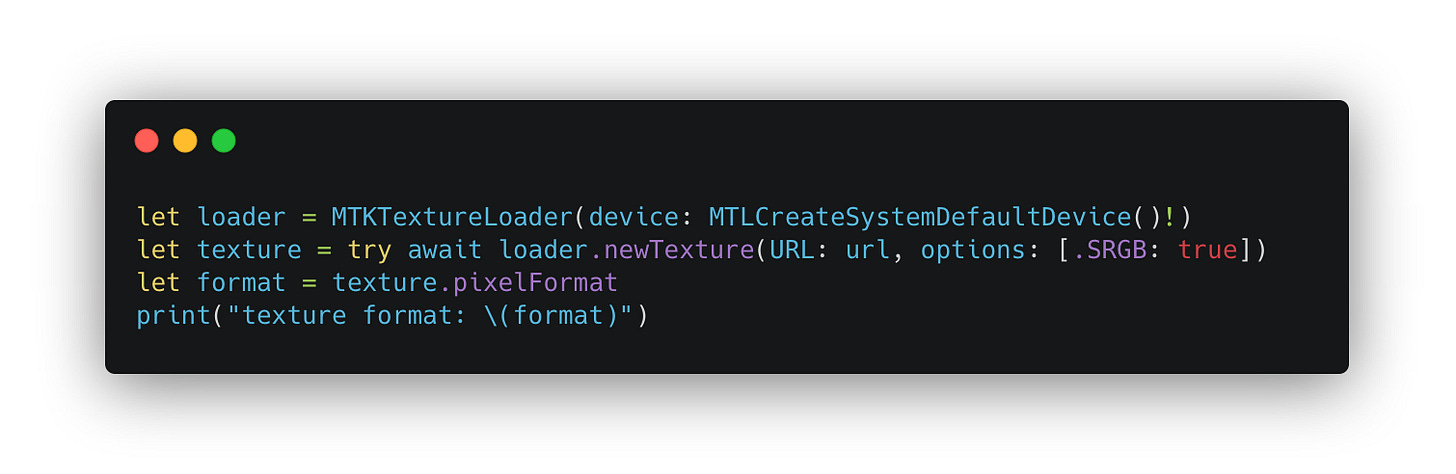
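In code, the descriptor approach might look like the sketch below, assuming an 8-bit RGBA layout. The helper name makeSRGBTextureDescriptor is hypothetical.

```swift
import Metal

/// Describes a 2D texture whose 8-bit RGBA contents are sRGB gamma-encoded.
/// (Hypothetical helper, for illustration only.)
func makeSRGBTextureDescriptor(width: Int, height: Int) -> MTLTextureDescriptor {
    let descriptor = MTLTextureDescriptor.texture2DDescriptor(
        pixelFormat: .rgba8Unorm_srgb, // .rgba8Unorm would mean no implicit conversion
        width: width,
        height: height,
        mipmapped: false
    )
    descriptor.usage = [.shaderRead, .renderTarget]
    return descriptor
}
```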

Or if you are using MTKTextureLoader to load from URL, simply add an option:
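A sketch of passing that option; the helper name loadSRGBTexture(from:device:) is hypothetical.

```swift
import MetalKit

/// Loads a texture from a file and marks it as sRGB gamma-encoded.
/// (Hypothetical helper, for illustration only.)
func loadSRGBTexture(from url: URL, device: MTLDevice) throws -> MTLTexture {
    let loader = MTKTextureLoader(device: device)
    // Pass false instead to have the data treated as linear pixel values.
    return try loader.newTexture(URL: url, options: [.SRGB: true])
}
```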

When loading a texture with MTKTextureLoader, it’s our responsibility to determine whether it’s an sRGB texture. The sRGB option defaults to false.

If we set the sRGB Texture correctly, we can safely assume that the pixel values we sample in the fragment shader are in linear form, and after doing mathematical operations, we don’t need to worry about the gamma-encoding.

Here are two examples of sRGB Texture and Non-sRGB Texture:

Fragment shaders in both examples simply divide the RGB pixel values by 2.

For sRGB Texture, when sampling from it, it will be automatically converted to linear form. Thus, the sampled result you get in the shader is in linear form.

For Non-sRGB Texture, no implicit conversion will be performed.

Treating the same RGB pixel values as different texture types and processing them with the exact same fragment shader will result in different values.

Afterword

In this article, we delved into the details of Color Space information within the Core Video, Core Graphics, Core Image, and Metal frameworks. As we conclude this segment, we look forward to the final part of this series, which will concentrate on Color Management in Core Graphics and Core Image. Thank you for reading, and stay tuned for more insights!
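The difference can be sketched numerically on the CPU. This is not Metal code: it only imitates the implicit conversions with the standard sRGB transfer function, and it assumes that the shader both reads from and writes to a texture of the same kind. All function names are hypothetical.

```swift
import Foundation

/// Decodes an sRGB gamma-encoded component (0...1) to linear light.
func srgbToLinear(_ c: Double) -> Double {
    c <= 0.04045 ? c / 12.92 : pow((c + 0.055) / 1.055, 2.4)
}

/// Encodes a linear-light component (0...1) with the sRGB transfer function.
func linearToSRGB(_ c: Double) -> Double {
    c <= 0.0031308 ? c * 12.92 : 1.055 * pow(c, 1.0 / 2.4) - 0.055
}

/// Stored value after a "divide by 2" shader on an sRGB texture.
func halvedAsSRGBTexture(_ stored: Double) -> Double {
    // Read: sRGB -> linear. Shader: divide by 2. Write: linear -> sRGB.
    linearToSRGB(srgbToLinear(stored) / 2)
}

/// Stored value after the same shader on a non-sRGB texture.
func halvedAsNonSRGBTexture(_ stored: Double) -> Double {
    stored / 2 // the shader sees and writes the stored number as is
}

let stored = 0.5
print(halvedAsNonSRGBTexture(stored)) // 0.25
print(halvedAsSRGBTexture(stored))    // roughly 0.36
```

The same stored value of 0.5 ends up as 0.25 in one case and about 0.36 in the other, even though the shader is identical.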