Crafting a better snapping experience for Sliders

In the recent update, PhotonCam introduces the Interactive Zoom feature to help you seamlessly zoom the camera with the custom slider shown above. It’s not a standard slider that you simply slide from one value to another; it combines the following features:

The slider shows scale marks that indicate the possible zoom factors.

The scales are discrete, and predefined.

Some key scales appear white in the slider. When the yellow indicator gets close enough to a key scale, it snaps to that value, and you have to move your finger a greater distance to leave the snapped position.

Yes, I know that the whole user experience is just like the Zoom Slider in the iOS Camera app. Recreating this experience is not entirely straightforward, but it is not that difficult either.

This blog will mainly focus on the snapping experience I’ve built for the Interactive Zoom Slider. First, I’ll talk about the most intuitive—but also the most brutal—way to achieve snapping. Then, I’ll introduce the algorithm I used when building the Interactive Zoom in PhotonCam.

To make the algorithm easier to explain, the following content is based on a simplified slider model. In this model, the slider contains continuous values ranging from 0.0 to 1.0, with 0.5 as the snapping point. Also, you should implement both the slider UI and the gesture handling manually, since built-in sliders on some platforms come with their own gesture implementations, which are hard to tailor.

Intuitive but brutal approach

There’s an intuitive—but admittedly brutal—way to achieve snapping:

Define a threshold within which snapping can occur.

When you receive a translation event and calculate the current translation value, compare the absolute difference between that value and the snapping value right before assigning it to the UI.

If the absolute difference is less than the predefined threshold, set the translation value to the snapping value.

The initial state before the gesture is depicted below:

-t and t mark the threshold offsets on either side of the snapping value 0.5, so snapping occurs within the range [0.5 - t, 0.5 + t].

During the gesture, check whether the current translation is within the threshold range [0.5 - t, 0.5 + t]. If not, do nothing and show the thumb based on the translation.

If the current translation is within the threshold range [0.5 - t, 0.5 + t], then we simply snap the thumb to the snapping value 0.5.

For a new drag gesture, the user must move beyond the threshold to exit the current snapping state.

The reason I describe this approach as brutal is that it discards some possible values. Specifically, the continuous ranges (0.5 - t, 0.5) and (0.5, 0.5 + t) can never be reached. If even small value changes can produce meaningful effects, this leads to a less-than-ideal experience.
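The threshold approach from this section can be sketched in a few lines of Swift. This is a minimal illustration rather than PhotonCam's code: the function name `snappedValue(for:snapValue:threshold:)` and the threshold of 0.05 are assumptions, and `Double` is used so the snippet runs without UIKit. The sweep at the end shows the drawback directly: no displayed value ever lands in the gaps next to 0.5.

```swift
/// Returns the value the slider should display for a raw normalized value.
/// `snapValue` and `threshold` are illustrative defaults (assumptions).
func snappedValue(for rawValue: Double,
                  snapValue: Double = 0.5,
                  threshold: Double = 0.05) -> Double {
    // Keep the raw value inside the slider's range first.
    let clamped = min(max(rawValue, 0.0), 1.0)
    // Inside the threshold window, replace the value with the snapping value.
    if abs(clamped - snapValue) < threshold {
        return snapValue
    }
    return clamped
}

// Sweep the slider in small steps and collect what the UI would show.
let outputs = stride(from: 0.0, through: 1.0, by: 0.01).map { snappedValue(for: $0) }

// Values close to 0.5 (but not equal to it) never appear in the output.
let unreachable = outputs.filter { $0 != 0.5 && abs($0 - 0.5) < 0.05 }
print(unreachable.isEmpty) // prints "true"
```

With a threshold of 0.05, a tenth of the slider's range collapses into the single value 0.5, which is exactly the loss described above.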

Partial Nonlinear points-to-values mapping approach

Is there a way to create a better snapping experience, especially for sliders with small scale steps that should remain reachable through dragging?

In the implementation of the Interactive Zoom Slider in PhotonCam, I’ve introduced a new method called the Partial Nonlinear points-to-values mapping approach.

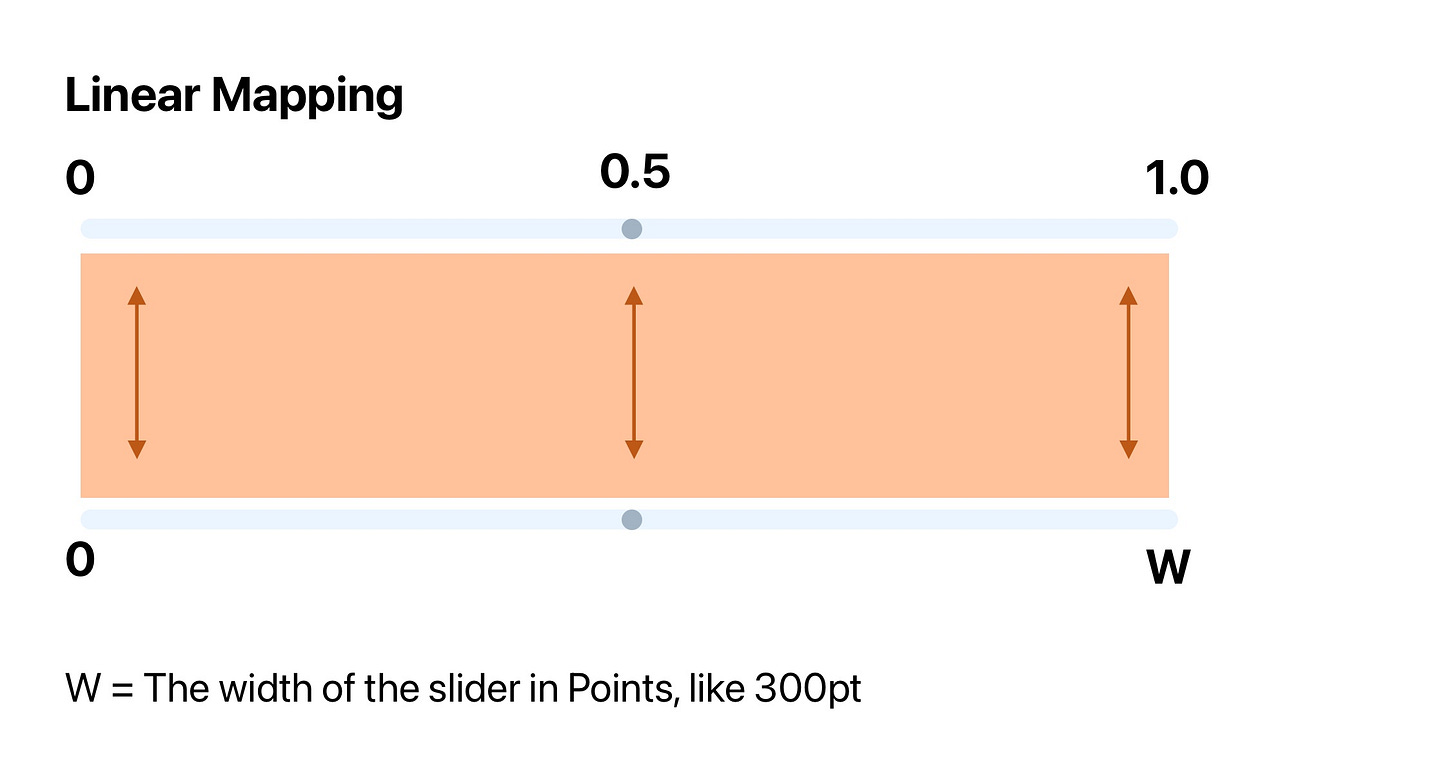

The major limitation of the previous method is that it relies on a linear mapping model:

It’s easy to map between the normalized values and the translation values in points. Consider the following pseudocode:

    // Horizontal translation of the gesture, in points.
    let translationXInPoints: CGFloat = ...
    // W is the width of the slider in points.
    let normalizedValue: CGFloat = translationXInPoints / W

Let’s try a different mapping model that is partially nonlinear.

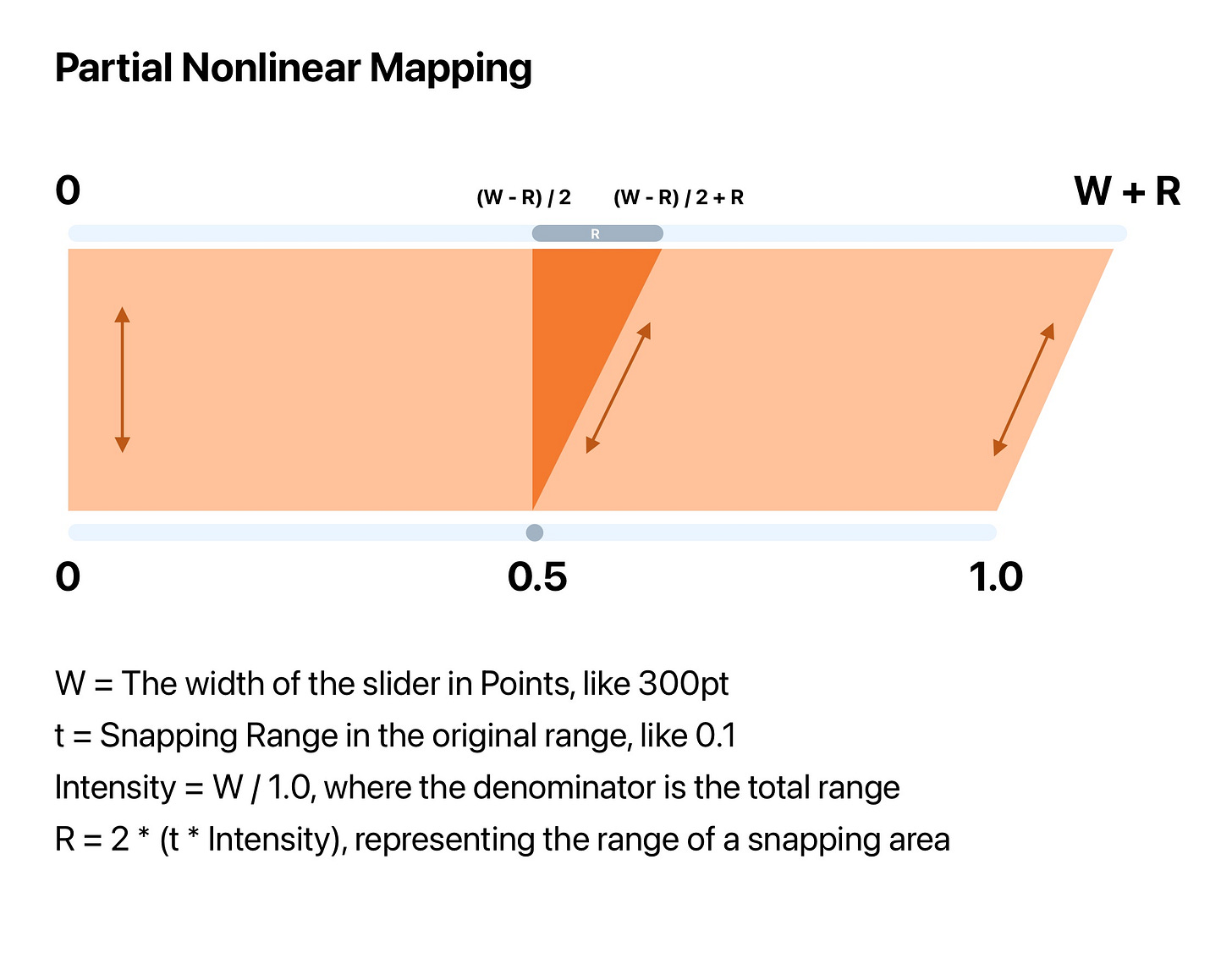

In this mapping model:

A range from zero to `W + R` is constructed, where `W` is the width of the slider in points and `R` is the total range considered as the snapping area.

If the current translation value falls within `[0, (W - R) / 2)`, it is mapped linearly to `[0, 0.5)`.

If the current translation value falls within `[(W - R) / 2, (W - R) / 2 + R)`, it is mapped to the fixed value 0.5.

Finally, if the current translation value falls within `[(W - R) / 2 + R, W + R]`, it is mapped linearly to `[0.5, 1.0]`.
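The three cases above amount to a piecewise function, which might be sketched like this in Swift. The type `SnapTrack` and its property names are hypothetical and not taken from PhotonCam. The segment boundaries are passed in as parameters rather than hard-coded, so the sketch only assumes that the drag range consists of a linear segment, a flat snapping area, and a second linear segment, with `snapStart > 0` and the snapping area ending before `totalLength`.

```swift
/// A drag range made of three consecutive segments, measured in points.
/// All names are illustrative (assumptions), not PhotonCam's API.
struct SnapTrack {
    var snapStart: Double    // where the snapping area begins
    var snapLength: Double   // R, the length of the snapping area
    var totalLength: Double  // the end of the whole drag range
    var snapValue: Double = 0.5

    var snapEnd: Double { snapStart + snapLength }

    /// Maps a position on the track (in points) to a value in [0, 1].
    func value(at position: Double) -> Double {
        let p = min(max(position, 0.0), totalLength)
        if p < snapStart {
            // First segment: linear from 0 up to the snapping value.
            return p / snapStart * snapValue
        } else if p < snapEnd {
            // Snapping area: every position yields the same value.
            return snapValue
        } else {
            // Last segment: linear from the snapping value up to 1.
            let fraction = (p - snapEnd) / (totalLength - snapEnd)
            return snapValue + fraction * (1.0 - snapValue)
        }
    }
}

// Hypothetical numbers: W = 300 and R = 40, with the boundaries listed above.
let w = 300.0, r = 40.0
let track = SnapTrack(snapStart: (w - r) / 2, snapLength: r, totalLength: w + r)

print(track.value(at: 65))   // first segment
print(track.value(at: 150))  // inside the snapping area
print(track.value(at: 340))  // end of the range
```

Unlike the threshold approach, every value between 0.0 and 1.0 is still reachable here: the snapping area consumes extra finger travel instead of consuming part of the value range.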

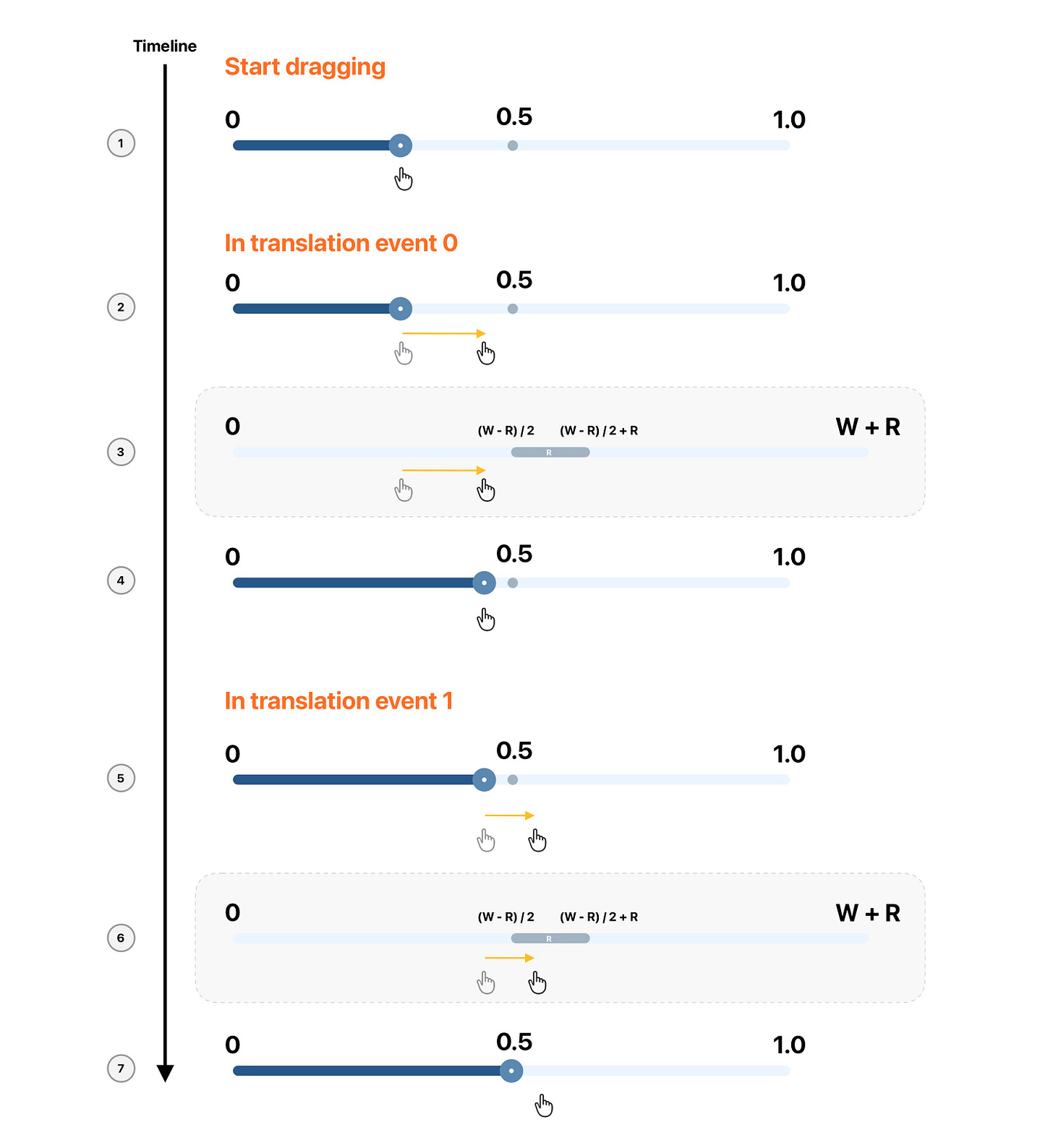

Here’s an example using the partial nonlinear approach. The events in the timeline are numbered to help you follow along with the corresponding notes:

A drag gesture is started, where the user places a finger on the thumb of the slider.

A translation event occurs. We calculate `translationX`, the difference between the current `offsetX` and the starting location, indicated by the yellow line.

Using the `translationX` from Event 2, we increment the starting value. Since the current translation is still within `[0, (W - R) / 2)`, a linear mapping is applied.

After the linear mapping, the thumb and the user’s finger remain aligned.

Without lifting the finger, the user tries to drag a bit further, which triggers a second translation event.

With this added distance, the current translation now falls within `[(W - R) / 2, (W - R) / 2 + R)`. In this case, the value snaps to 0.5.

Because the value is snapped, the thumb stays fixed at 0.5. You may notice that the user’s finger is slightly offset from the thumb—this is expected and intentional, since part of the translation range is absorbed during snapping.
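To make the timeline concrete, here is a rough Swift simulation of one drag gesture. Everything in it is an assumption made for illustration: the constants (a snapping area from 130 to 170 points on a 340-point range), the helper names `mappedValue(at:)` and `trackPosition(forValue:)`, and the `DragSession` type. In particular, when a drag starts from the snapped value 0.5, the sketch places the start in the middle of the snapping area, which is one possible choice rather than something the post specifies.

```swift
// Illustrative constants (assumptions): the snapping area is [130, 170).
let snapStart = 130.0, snapEnd = 170.0, totalLength = 340.0

/// Forward mapping from a track position (points) to a normalized value.
func mappedValue(at position: Double) -> Double {
    let p = min(max(position, 0.0), totalLength)
    if p < snapStart { return p / snapStart * 0.5 }
    if p < snapEnd { return 0.5 }
    return 0.5 + (p - snapEnd) / (totalLength - snapEnd) * 0.5
}

/// Inverse mapping, used once when a drag begins. The value 0.5 covers the
/// whole snapping area, so its midpoint is picked (an assumption).
func trackPosition(forValue value: Double) -> Double {
    if value < 0.5 { return value / 0.5 * snapStart }
    if value > 0.5 { return snapEnd + (value - 0.5) / 0.5 * (totalLength - snapEnd) }
    return (snapStart + snapEnd) / 2
}

/// One drag gesture: the start position is fixed when the finger goes down,
/// and each translation event is added to it before mapping.
struct DragSession {
    let startPosition: Double

    init(startValue: Double) {
        startPosition = trackPosition(forValue: startValue)
    }

    func value(forTranslation translationX: Double) -> Double {
        mappedValue(at: startPosition + translationX)
    }
}

// The drag starts with the slider at 0.25, i.e. at track position 65.
let session = DragSession(startValue: 0.25)
print(session.value(forTranslation: 26))   // still in the linear segment
print(session.value(forTranslation: 70))   // 0.5: entered the snapping area
print(session.value(forTranslation: 100))  // 0.5: extra travel is absorbed
print(session.value(forTranslation: 110))  // past the snapping area again
```

Between translations of 70 and 100 points the finger keeps moving while the value stays at 0.5, which is where the offset between finger and thumb comes from.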

This approach has one drawback, though: the position of the thumb in your slider and the user’s finger will no longer align after at least one snapping event. However, if you’re building a non-standard slider—like the Interactive Zoom Slider in PhotonCam or the one in the Camera app—this misalignment is completely acceptable.